Fast and stable driving behavior prediction in the high-dimensional state space

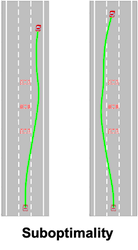

On the other hand, a Rapidly-exploring Random Tree (RRT) can plan stably by growing a large tree that covers the whole space; however, covering the high-dimensional state space needed to model cars requires a large computational cost. Template-based RRT (Ma et al., ITSC 2014) extends RRT to handle nonholonomic dynamics while reducing the computational cost, but RRT planning remains inefficient and is still difficult to apply in high-dimensional spaces. Predicting driving behavior therefore requires resolving the trade-off between the stability and the speed of planning.
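To illustrate why covering the space is expensive, here is a minimal sketch of plain RRT (not the template-based variant) for a point in an obstacle-free box of arbitrary dimension. Straight-line steering, the goal bias, and all parameter values are assumptions chosen for illustration; a car model would need nonholonomic steering and collision checks.

```python
import math
import random
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, ...]


def rrt(start: Point, goal: Point, lower: Sequence[float], upper: Sequence[float],
        step: float = 0.5, goal_bias: float = 0.1, goal_tol: float = 0.5,
        max_iters: int = 5000, seed: int = 0) -> Optional[List[Point]]:
    """Plain RRT in an obstacle-free axis-aligned box of any dimension (sketch)."""
    rng = random.Random(seed)
    nodes: List[Point] = [tuple(start)]
    parent: List[int] = [-1]
    for _ in range(max_iters):
        # Sample a random state; occasionally sample the goal to bias growth.
        if rng.random() < goal_bias:
            sample = tuple(goal)
        else:
            sample = tuple(rng.uniform(lo, hi) for lo, hi in zip(lower, upper))
        # Brute-force nearest neighbour: O(N) per iteration in the tree size.
        near_idx = min(range(len(nodes)), key=lambda i: math.dist(nodes[i], sample))
        near = nodes[near_idx]
        d = math.dist(near, sample)
        if d == 0.0:
            continue
        # Steer: move at most `step` from the nearest node toward the sample.
        t = min(1.0, step / d)
        new = tuple(a + t * (b - a) for a, b in zip(near, sample))
        nodes.append(new)
        parent.append(near_idx)
        if math.dist(new, goal) <= goal_tol:
            # Follow parent pointers back to the root to recover the path.
            path, i = [], len(nodes) - 1
            while i != -1:
                path.append(nodes[i])
                i = parent[i]
            return path[::-1]
    return None


path = rrt((0.0, 0.0), (9.0, 9.0), lower=(0.0, 0.0), upper=(10.0, 10.0))
```

The same function runs unchanged in 4 or 10 dimensions, but at a fixed resolution the number of nodes needed to cover the box grows exponentially with dimension, and each iteration pays a nearest-neighbour search over all of them.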

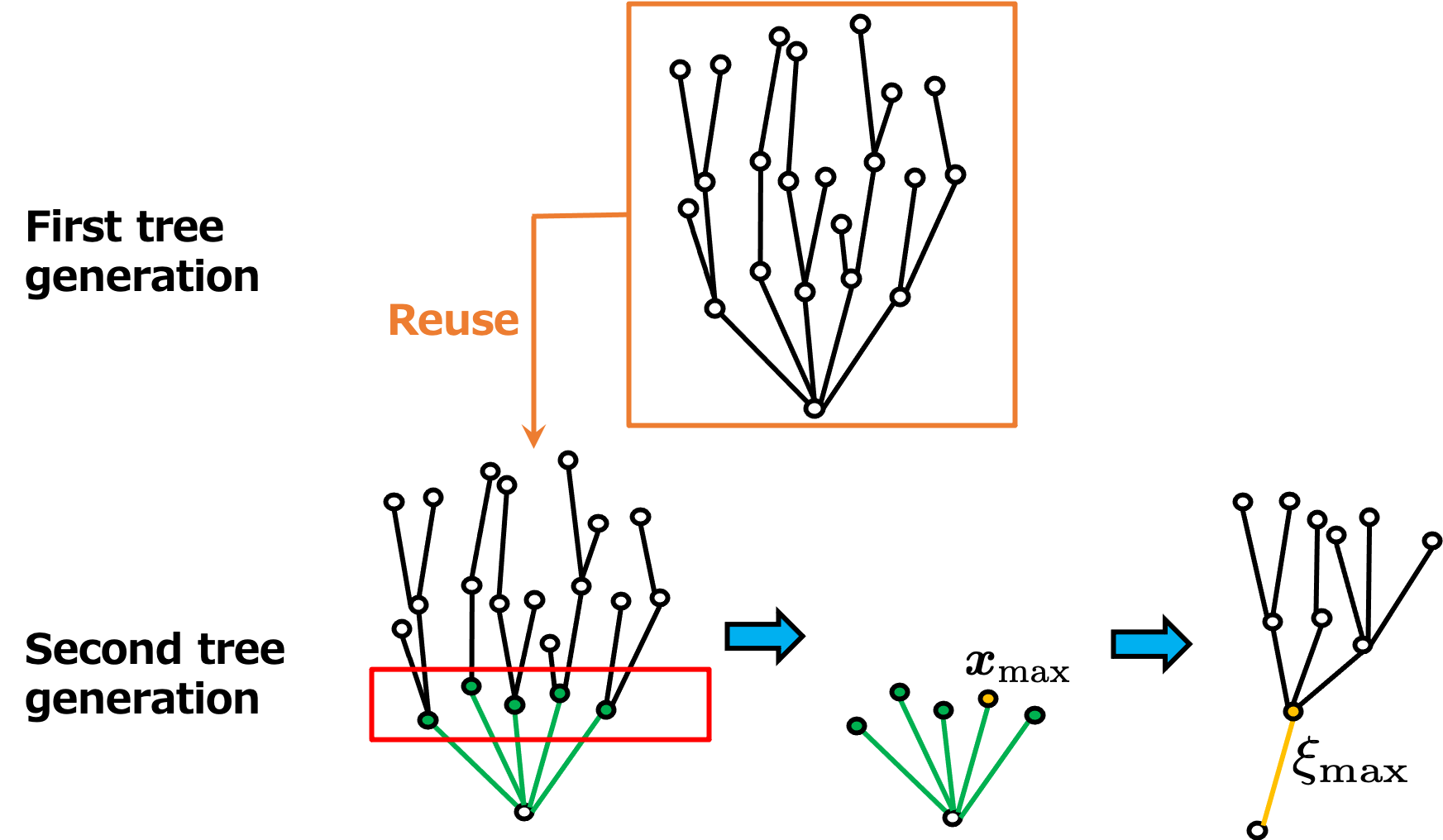

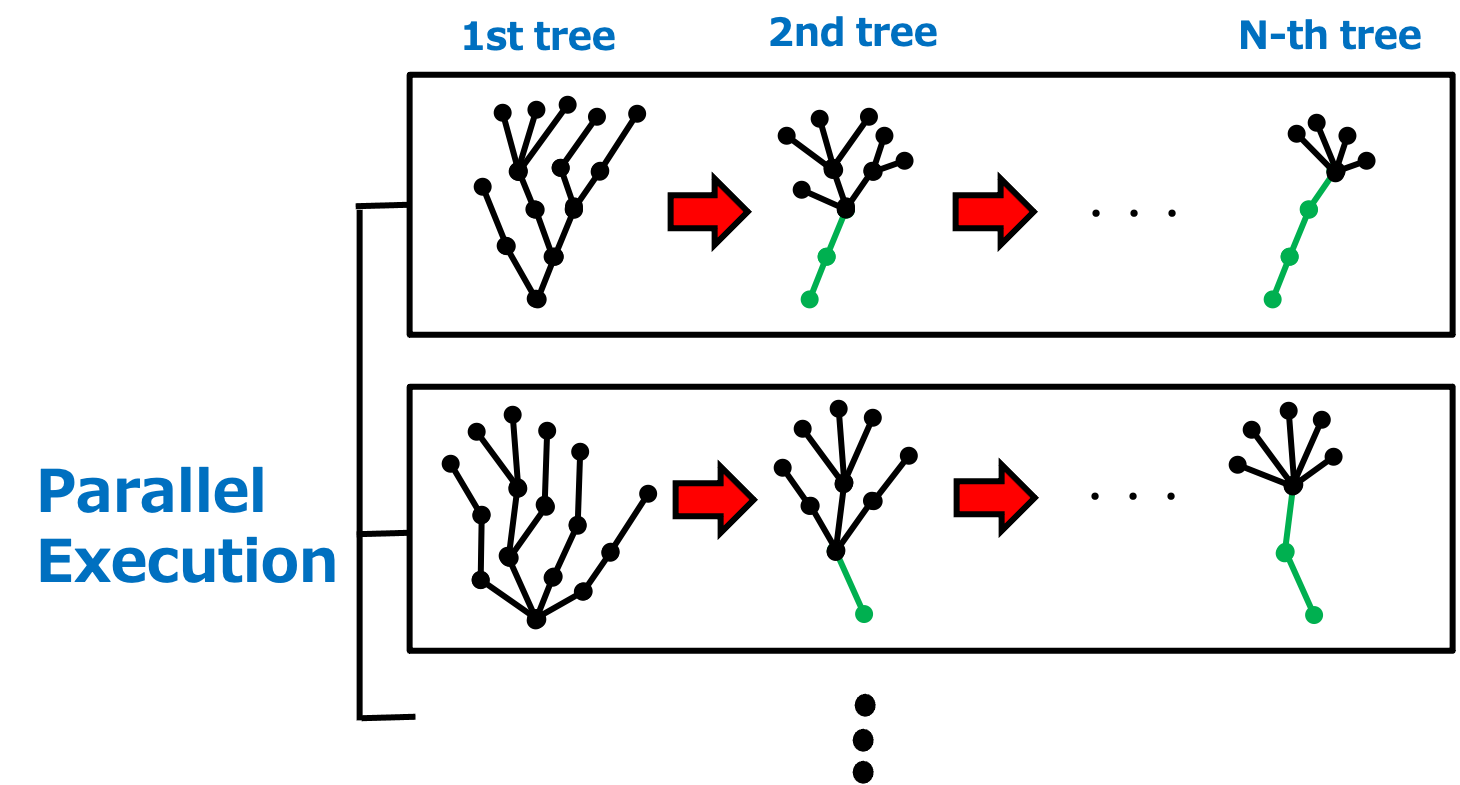

To deal with this problem, we proposed fast and stable template-based RRT (FASTEMLERRT), which combines two processes: edge pruning and parallel generation of multiple trees. Pruning unnecessary edges lets the planner focus on the part of the space where high reward is expected.
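The pruning criterion is not specified on this page. As a hypothetical sketch, assume each node carries an optimistic estimate (`upper_bound`, a made-up field) of the reward reachable through it; cutting the edge into a node whose estimate falls below a threshold discards its whole subtree, in the style of branch and bound.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Node:
    parent: Optional[int]  # None for the root (current vehicle state)
    upper_bound: float     # hypothetical optimistic reward estimate via this node


def prune(tree: Dict[int, Node], threshold: float) -> Dict[int, Node]:
    """Return the part of the tree that survives pruning (sketch).

    A non-root node is kept only if its upper bound reaches `threshold` and
    its parent survived; cutting the edge into a node drops its whole subtree.
    """
    children: Dict[Optional[int], List[int]] = {}
    for nid, node in tree.items():
        children.setdefault(node.parent, []).append(nid)
    kept: Dict[int, Node] = {}
    stack = list(children.get(None, []))  # start from the root(s)
    while stack:
        nid = stack.pop()
        node = tree[nid]
        if node.parent is None or node.upper_bound >= threshold:
            kept[nid] = node
            # Only children of surviving nodes are ever examined.
            stack.extend(children.get(nid, []))
    return kept


tree = {0: Node(None, 10.0), 1: Node(0, 9.0), 2: Node(0, 4.0),
        3: Node(1, 8.0), 4: Node(2, 3.0), 5: Node(1, 2.0)}
kept = prune(tree, threshold=5.0)  # keeps nodes 0, 1 and 3
```

Growth would then continue only from the surviving nodes, so later samples are spent near promising regions. The threshold could, for instance, be the reward of the best complete path found so far; that choice is also an assumption.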

Also, parallel generation of multiple trees can reduce the computational cost and efficiently cover a large area.
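How the multiple trees are seeded, rooted, and merged is not described here. The sketch below assumes the simplest scheme: each tree gets its own random seed, trees are grown independently, and the best-reward path across all trees is kept. The reward function, the sampling box, and straight-line steering are hypothetical stand-ins for the template-based planner.

```python
import math
import random
from concurrent.futures import ThreadPoolExecutor
from typing import List, Tuple

Point = Tuple[float, float]


def reward(p: Point) -> float:
    # Hypothetical reward: prefer states close to a target at (8, 2).
    return -math.dist(p, (8.0, 2.0))


def grow_tree(seed: int, root: Point = (0.0, 0.0), n_nodes: int = 300,
              step: float = 0.5) -> Tuple[float, List[Point]]:
    """Grow one random tree with its own RNG; return its best reward and path."""
    rng = random.Random(seed)
    nodes: List[Point] = [root]
    parent: List[int] = [-1]
    for _ in range(n_nodes):
        sample = (rng.uniform(0.0, 10.0), rng.uniform(-5.0, 5.0))
        i = min(range(len(nodes)), key=lambda k: math.dist(nodes[k], sample))
        d = math.dist(nodes[i], sample)
        t = min(1.0, step / d) if d > 0 else 0.0
        nodes.append((nodes[i][0] + t * (sample[0] - nodes[i][0]),
                      nodes[i][1] + t * (sample[1] - nodes[i][1])))
        parent.append(i)
    best = max(range(len(nodes)), key=lambda k: reward(nodes[k]))
    path, k = [], best
    while k != -1:
        path.append(nodes[k])
        k = parent[k]
    return reward(nodes[best]), path[::-1]


def plan_with_multiple_trees(n_trees: int = 4) -> Tuple[float, List[Point]]:
    # Trees are independent, so they can be grown concurrently. Threads keep the
    # sketch portable; CPU-bound pure Python needs a ProcessPoolExecutor for a
    # real speed-up in CPython.
    with ThreadPoolExecutor(max_workers=n_trees) as pool:
        results = list(pool.map(grow_tree, range(n_trees)))
    return max(results, key=lambda r: r[0])


best_reward, best_path = plan_with_multiple_trees(4)
```

Because each tree owns its random generator, the result does not depend on thread scheduling, and several small trees started from different seeds tend to spread over more of the space than one tree of the same total size.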

The combination of these two processes enables stable and fast prediction in high-dimensional spaces. Experimental results on artificial highway and intersection environments show that our approach plans faster and more robustly than existing methods.

Inverse reinforcement learning for driving behavior modeling in the high-dimensional state space

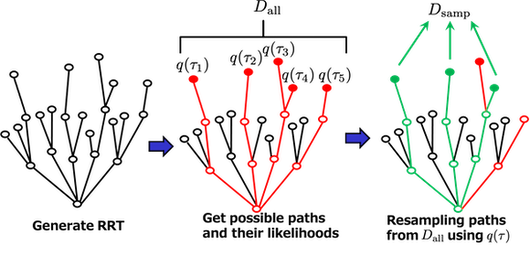

Inverse reinforcement learning (IRL) is a prominent approach to this problem because it can learn complicated behaviors directly from expert demonstrations. Driving data tend to contain several optimal behaviors because they depend on drivers' preferences. To capture this property, maximum entropy IRL has attracted attention because its probabilistic model can account for suboptimal behavior.
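For reference, the standard maximum entropy IRL model (Ziebart et al., 2008) with a linear reward over trajectory features is shown below, where Z(θ) is the normalizing partition function. The exact features and reward form used in this work are not stated here, so the linear form is an assumption. Because probabilities depend only on reward differences, a slightly worse trajectory remains plausible instead of being ruled out:

```latex
R_\theta(\zeta) = \theta^\top \mathbf{f}(\zeta), \qquad
P(\zeta \mid \theta) = \frac{\exp\left(R_\theta(\zeta)\right)}{Z(\theta)}, \qquad
\frac{P(\zeta_1 \mid \theta)}{P(\zeta_2 \mid \theta)} = \exp\left(R_\theta(\zeta_1) - R_\theta(\zeta_2)\right)
```

For example, if ζ2 has a reward 1 lower than ζ1, it is e^(-1) ≈ 0.37 times as likely as ζ1, not impossible.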

However, maximum entropy IRL must compute the partition function, which is computationally expensive, so the model cannot be applied to the high-dimensional state spaces required for detailed car modeling. Existing studies attempt to reduce this cost by approximating maximum entropy IRL; however, an accurate approximation requires combining efficient path planning with proper parameter updates, and existing methods have not achieved this.
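The cost shows up in the log-likelihood gradient of the standard formulation. Assuming again a linear reward θᵀf over trajectory features, N demonstrations ζ̃_i, and P(ζ | θ) = exp(θᵀf(ζ)) / Z(θ), the second gradient term is an expectation under the model, which needs the partition function, a sum (or integral) over every possible trajectory:

```latex
\begin{aligned}
Z(\theta) &= \sum_{\zeta} \exp\left(\theta^\top \mathbf{f}(\zeta)\right), \\
\mathcal{L}(\theta) &= \frac{1}{N}\sum_{i=1}^{N} \log P(\tilde{\zeta}_i \mid \theta)
  = \theta^\top \tilde{\mathbf{f}} - \log Z(\theta), \\
\nabla_\theta \mathcal{L}(\theta) &= \tilde{\mathbf{f}} - \sum_{\zeta} P(\zeta \mid \theta)\,\mathbf{f}(\zeta),
\qquad \tilde{\mathbf{f}} = \frac{1}{N}\sum_{i=1}^{N} \mathbf{f}(\tilde{\zeta}_i).
\end{aligned}
```

As an illustrative count, even a coarse discretization with 5 actions per step and a 20-step horizon gives 5^20 ≈ 9.5 × 10^13 trajectories, and continuous car states turn the sum into an integral, which is why approximation is needed.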

In this study, we leverage an RRT motion planner to efficiently sample multiple informative paths from the generated trees. We also propose a novel RRT-based importance sampling method for accurate approximation. Together, these two processes yield a stable and fast IRL model in large, high-dimensional spaces.
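The RRT-based importance sampling itself is not detailed on this page. As a generic illustration (not the authors' estimator), self-normalized importance sampling replaces the intractable model expectation of features with a weighted average over paths ζ_j drawn from a proposal q, using weights proportional to exp(θᵀf(ζ_j)) / q(ζ_j). The sketch assumes linear reward features and that log q is known for each sampled path; how q would be obtained from the RRT trees is left open.

```python
import math
from typing import List, Sequence


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def maxent_gradient_is(theta: Sequence[float],
                       expert_feats: List[Sequence[float]],
                       sample_feats: List[Sequence[float]],
                       sample_log_q: Sequence[float]) -> List[float]:
    """Importance-sampled gradient of the MaxEnt IRL log-likelihood (sketch).

    expert_feats: feature vectors f(zeta) of the demonstrations
    sample_feats: feature vectors of paths drawn from a proposal q
                  (e.g. paths extracted from planner trees)
    sample_log_q: log q(zeta) for each sampled path (assumed known)
    """
    dim = len(theta)
    # Empirical feature expectation of the demonstrations.
    f_expert = [sum(f[k] for f in expert_feats) / len(expert_feats) for k in range(dim)]
    # Log importance weights: theta^T f(zeta) - log q(zeta).
    log_w = [dot(theta, f) - lq for f, lq in zip(sample_feats, sample_log_q)]
    # Self-normalize, subtracting the max log-weight for numerical stability.
    m = max(log_w)
    w = [math.exp(lw - m) for lw in log_w]
    total = sum(w)
    f_model = [sum(wj * f[k] for wj, f in zip(w, sample_feats)) / total
               for k in range(dim)]
    # Gradient: expert features minus estimated model features.
    return [fe - fm for fe, fm in zip(f_expert, f_model)]


# Two sampled paths; the first was drawn three times as often as the second.
g = maxent_gradient_is([0.0], [[1.0]], [[0.0], [2.0]],
                       [math.log(0.75), math.log(0.25)])  # g[0] is -0.5
```

Dividing by q corrects for paths the planner proposes more often than the model would, so a proposal concentrated on informative, high-reward paths can give a low-variance estimate with few samples; a gradient-ascent step would then update θ by a small multiple of this gradient.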

Experimental results on artificial environments show that our approach is more stable and faster than existing IRL methods.

Publications

Fast and robust driving behavior prediction by parallelizing RRT with sequential pruning

細馬 慎平, 須ヶ﨑 聖人, 竹中 一仁, 平野 大輔, 孫 理天, 下坂 正倫

Proceedings of the 38th Annual Conference of the Robotics Society of Japan, online, 10 2020

RRT-based maximum entropy inverse reinforcement learning for robust and efficient driving behavior prediction.

Shinpei Hosoma, Masato Sugasaki, Hiroaki Arie, and Masamichi Shimosaka.

2022 IEEE Intelligent Vehicles Symposium (IV 2022), pp. 1353-1359, Aachen, Germany, 6 2022.