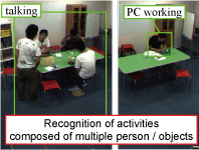

Recognizing daily activities from lifelog images is useful for intelligent human support systems.

Because these activities involve multiple components, such as persons and objects, traditional computer vision techniques (e.g., the Dalal-Triggs HOG pedestrian detector, which matches a single rigid template) cannot handle them properly.

In this work, we employ discriminative deformable part models, which combine an appearance model of the whole activity with appearance models of its individual components.

The models compute matching scores while allowing their parts to shift, so they are robust to changes in the positions of persons or objects.
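The sketch below shows how such a model might score one candidate window. It assumes the scoring scheme of the deformable part models of Felzenszwalb et al.: the root (whole-activity) filter score, plus, for each part, the best filter response minus a quadratic deformation cost around the part's anchor. The abstract does not specify the exact cost or features used. The response maps, weights, and the names `best_part_placement` and `dpm_score` are hypothetical. Real implementations compute responses on HOG feature pyramids and use distance transforms rather than this brute-force search.

```python
from typing import List, Sequence, Tuple

# A filter response map: response[y][x] is how well a filter matches at cell (x, y).
ResponseMap = List[List[float]]


def best_part_placement(response: ResponseMap,
                        anchor: Tuple[int, int],
                        weights: Tuple[float, float]) -> Tuple[float, Tuple[int, int]]:
    """Return (score, (x, y)) for the placement maximizing response minus a
    quadratic deformation cost wx*dx^2 + wy*dy^2 relative to the anchor (assumed form)."""
    ax, ay = anchor
    wx, wy = weights
    best_score, best_pos = float("-inf"), anchor
    for y, row in enumerate(response):
        for x, value in enumerate(row):
            dx, dy = x - ax, y - ay
            score = value - (wx * dx * dx + wy * dy * dy)
            if score > best_score:
                best_score, best_pos = score, (x, y)
    return best_score, best_pos


def dpm_score(root_score: float,
              parts: Sequence[Tuple[ResponseMap, Tuple[int, int], Tuple[float, float]]],
              bias: float = 0.0) -> float:
    """Root filter score plus the best deformed score of every part, plus a bias."""
    total = root_score + bias
    for response, anchor, weights in parts:
        part_score, _ = best_part_placement(response, anchor, weights)
        total += part_score
    return total


if __name__ == "__main__":
    # Hypothetical "person" part whose strongest response lies one cell right of its anchor.
    person = [[0.0, 0.0, 0.0],
              [0.0, 0.2, 1.0],
              [0.0, 0.0, 0.0]]
    print(best_part_placement(person, (1, 1), (0.1, 0.1)))
    print(dpm_score(0.5, [(person, (1, 1), (0.1, 0.1))]))
```

With small deformation weights, the part moves to the stronger response at (2, 1) and pays only a small cost. With large weights, it stays at its anchor and the model behaves like a rigid template. This trade-off is what makes part-based scoring tolerant of people or objects shifting position.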

Experimental results show that the proposed method robustly recognizes activities such as “talking” from lifelog images captured by a static camera.